The next frontier of AI will require moving beyond the digital realm into the physical world. Our group is developing AI that can sense, understand, and interact with physical signals as humans do. For spatial understanding, the new modalities we are exploring include 3D and 4D perception (PAGE-4D) and the correspondences between spatial audio and video (Schrodinger Audio-Visual Editor, REGen).

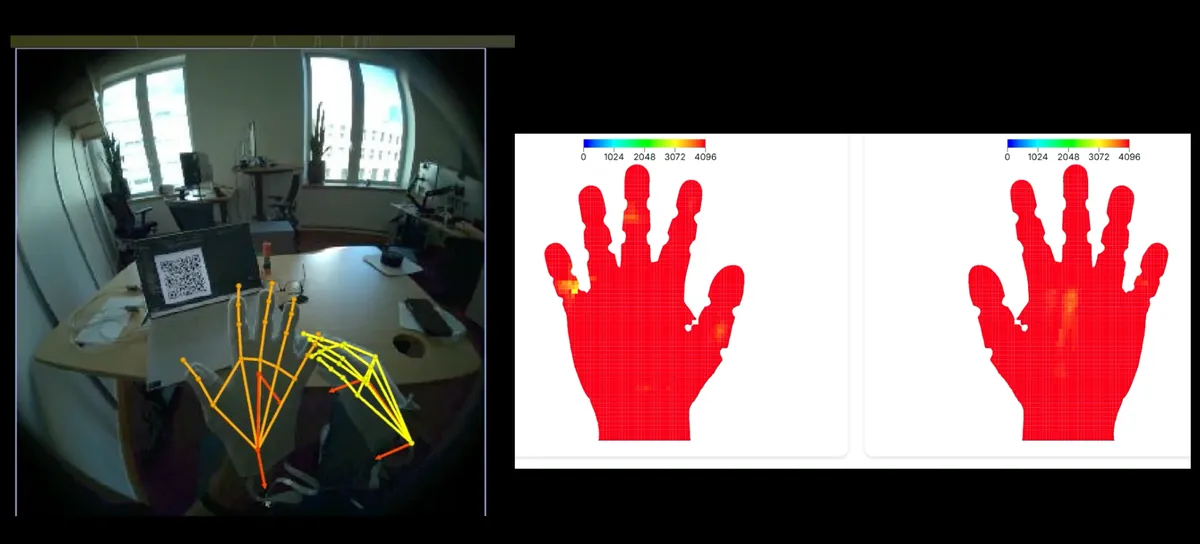

Beyond vision, touch is critical to physical interaction. We are prototyping low-cost, personalized resistive tactile-sensing gloves (Fits like a Flex-Glove) that capture the human sense of touch. OpenTouch is our latest milestone: the first in-the-wild, full-hand tactile dataset paired with egocentric vision. It advances AI for multimodal egocentric perception across vision, touch, and pose, for embodied interaction, and for contact-rich robotic manipulation.

Our group is also equipping AI with the sense of smell. AI for smell can enhance entertainment, gaming, and marketing; support quality control in the chemical and manufacturing industries; aid early disease detection (e.g., COVID-19); and even ‘smell’ hormones and indicators of emotional state, stress, and early cancer prognosis. We recently released SmellNet, the first large-scale dataset of real-world smells, comprising 50 hours of data collected with portable gas sensors across 50 substances (nuts, spices, herbs, fruits, and vegetables). We are now actively pushing toward high-resolution detection and transmission of smells.

In the long term, our vision is to develop multisensory world models that are grounded in physical sensing and interaction, respond seamlessly and controllably to users, and are applied beneficially to enhance creativity and productivity in the physical world.

Key works:

OpenTouch: Bringing Full-Hand Touch to Real-World Interaction, arXiv 2025

SmellNet: A Large-scale Dataset for Real-world Smell Recognition, arXiv 2025

Schrodinger Audio-Visual Editor: Object-Level Audiovisual Removal, arXiv 2025

PAGE-4D: Disentangled Pose and Geometry Estimation for 4D Perception, arXiv 2025