The next generation of foundation models will be multisensory, being able to process, reason, and interact over modalities such as text, images, video, speech, time-series, sensors, and more. Our group leads today’s research in curating large-scale multimodal datasets to train and evaluate foundation models, developing effective and efficient training paradigms, enhancing reasoning to solve complex multimodal problems, and developing interactive multimodal agents for multi-turn problems.

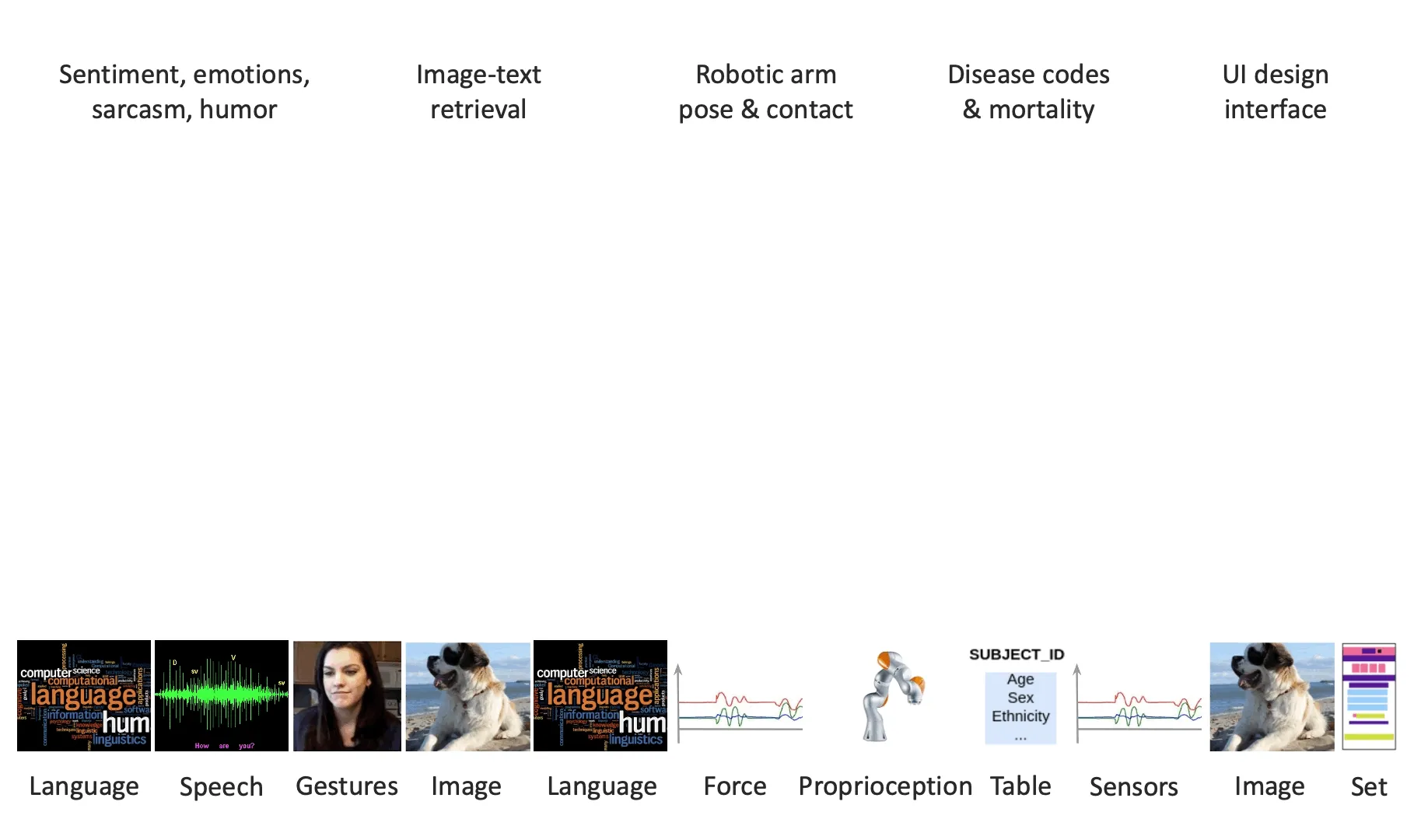

We have built a series of models to learn from multimodal sequences such as dialog, speech, videos, medical time series, and physical sensors. While prior work often summarized temporal modalities into static features for fusion, we developed new methods for temporal fusion at the fine-grained level. These were among the first cross-modal attention mechanisms and Multimodal Transformer architectures now widely used in today’s foundation models. Our work in high-modality models (HighMMT) further paved the way towards generalist multimodal intelligence that scales across an unprecedented range of modalities and skills, while robustly handling missing modalities. Finally, holistic benchmarks are key to training and rigorously evaluating progress in multisensory foundation models, our HEMM and MultiBench benchmarks are widely used for this purpose.

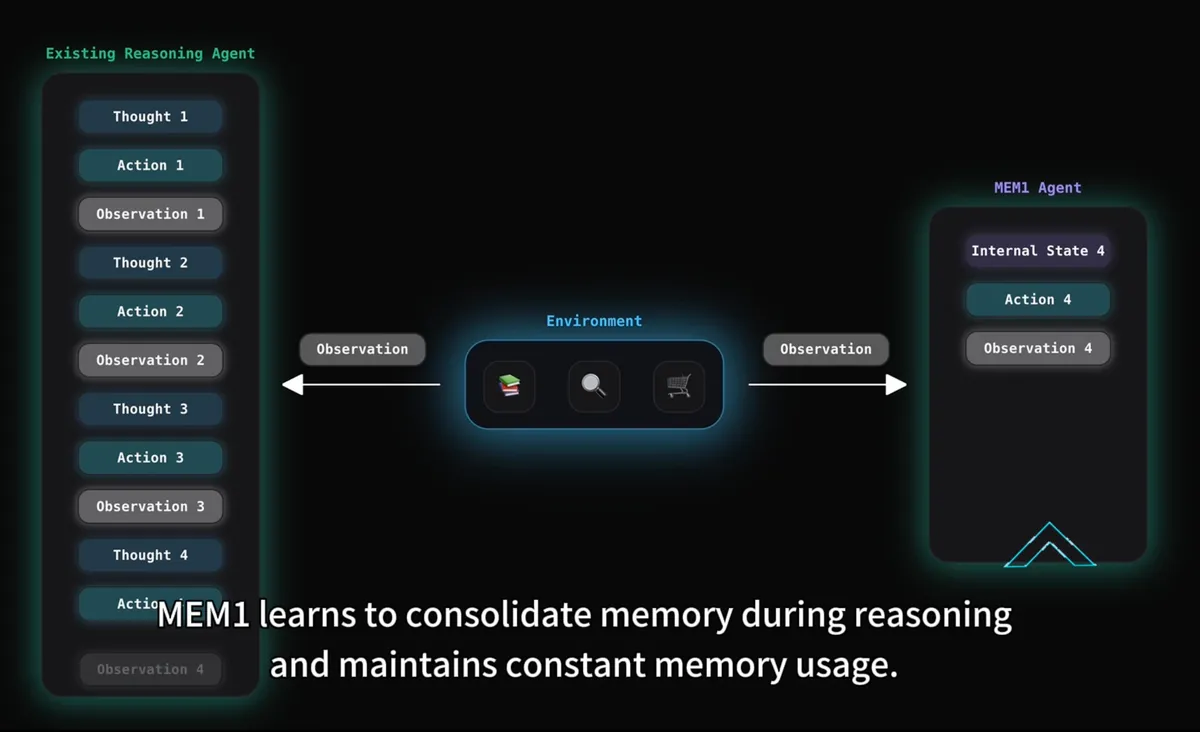

Today, we are particularly interested in foundation models bridging discrete text and high-dimensional continuous temporal data (e.g., medical sensors and time-series) and improving the Pareto-frontier of cross-modal generalization and efficiency with better multimodal interaction-aware experts and task grouping mechanisms (Time-MoE, MINT, MMoE). Beyond prediction, we enhance these foundation models with multimodal reasoning and agentic interaction capabilities. These interactive systems can tackle complex problems using logical, mathematical, and systematic reasoning across diverse data sources and external knowledge, while ensuring their decision-making process remains robust and transparent to human collaborators. Our group is developing new memory mechanisms for long-horizon reasoning agents, creating new environments to train and evaluate multimodal agents, and applying them to real-world problems in healthcare, education, and the workplace.

Key works:

MEM1: Learning to Synergize Memory and Reasoning for Efficient Long-Horizon Agents, arXiv 2025

PuzzleWorld: A Benchmark for Multimodal, Open-Ended Reasoning in Puzzlehunts, arXiv 2025

Guiding Mixture-of-Experts with Temporal Multimodal Interactions, arXiv 2025

MINT: Multimodal Instruction Tuning with Multimodal Interaction Grouping, arXiv 2025

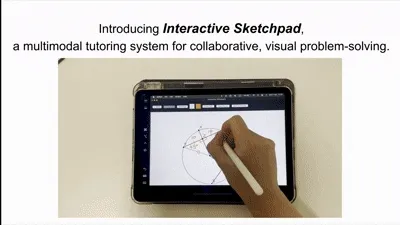

Interactive Sketchpad is an AI-powered tutoring system that integrates step-by-step reasoning with interactive, AI-generated visualizations, enabling students to learn math and science through shared sketch-based collaboration. Built on a large multimodal model, it demonstrates how visual reasoning and interaction can make AI tutoring more engaging, natural, and effective, pointing toward richer multimodal human–AI learning experiences.

Progressive Compositionality in Text-to-Image Generative Models, ICLR 2025 spotlight

MMoE: Enhancing Multimodal Models with Mixtures of Multimodal Interaction Experts, EMNLP 2024

HEMM: Holistic Evaluation of Multimodal Foundation Models, NeurIPS 2024

MultiBench: Multiscale Benchmarks for Multimodal Representation Learning, NeurIPS 2021

Multimodal Transformer for Unaligned Multimodal Language Sequences, ACL 2019